Make Every feature Binary: A 135B parameter sparse neural network for massively improved search relevance - Microsoft Research

PyTorch-Direct: Introducing Deep Learning Framework with GPU-Centric Data Access for Faster Large GNN Training | NVIDIA On-Demand

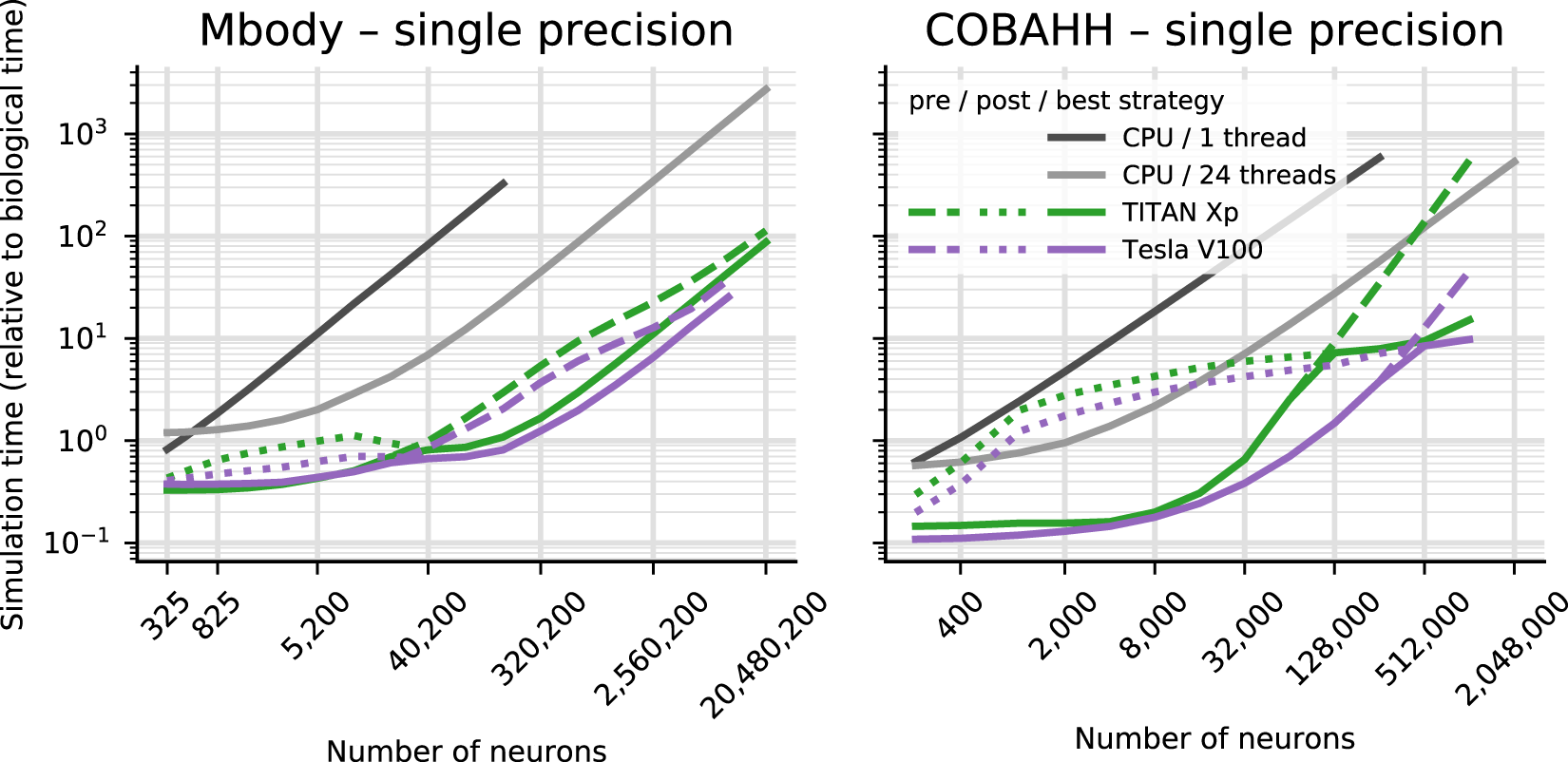

Brian2GeNN: accelerating spiking neural network simulations with graphics hardware | Scientific Reports

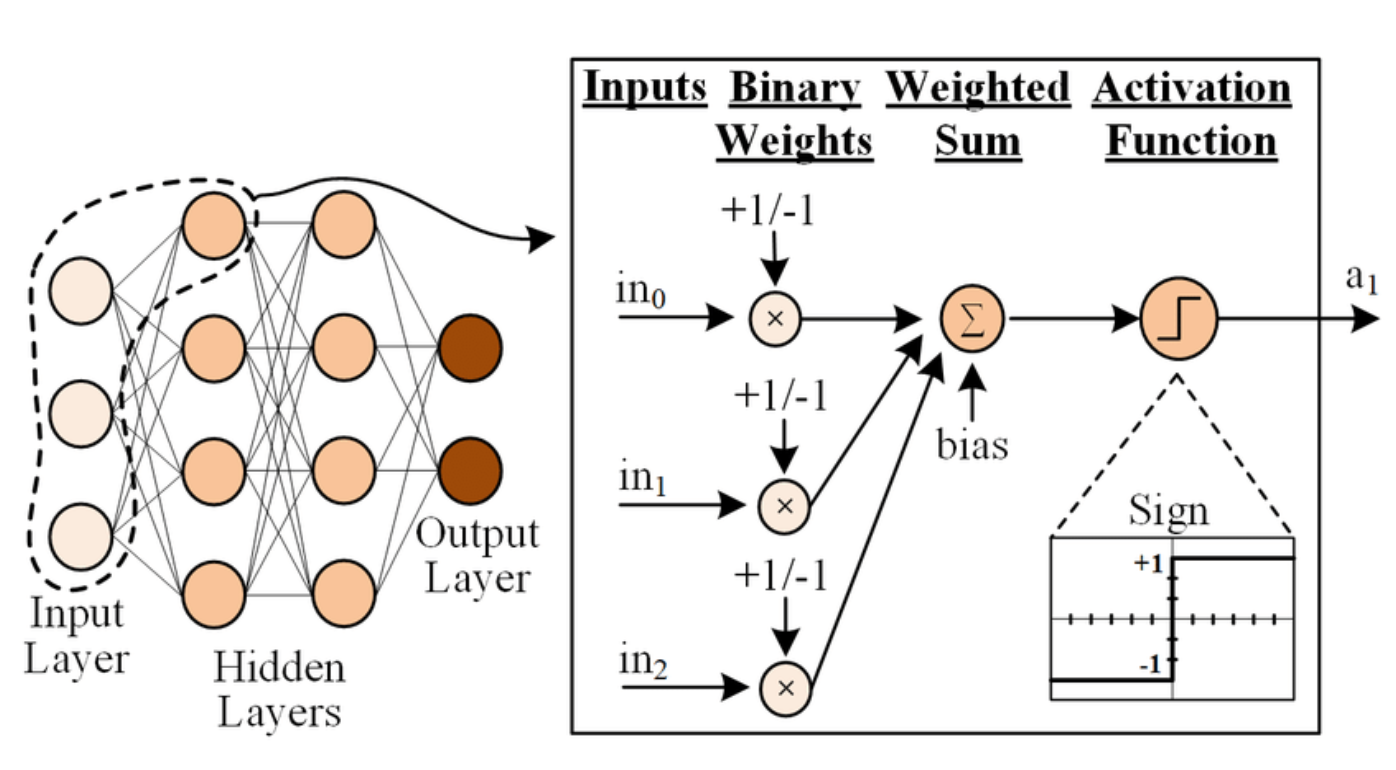

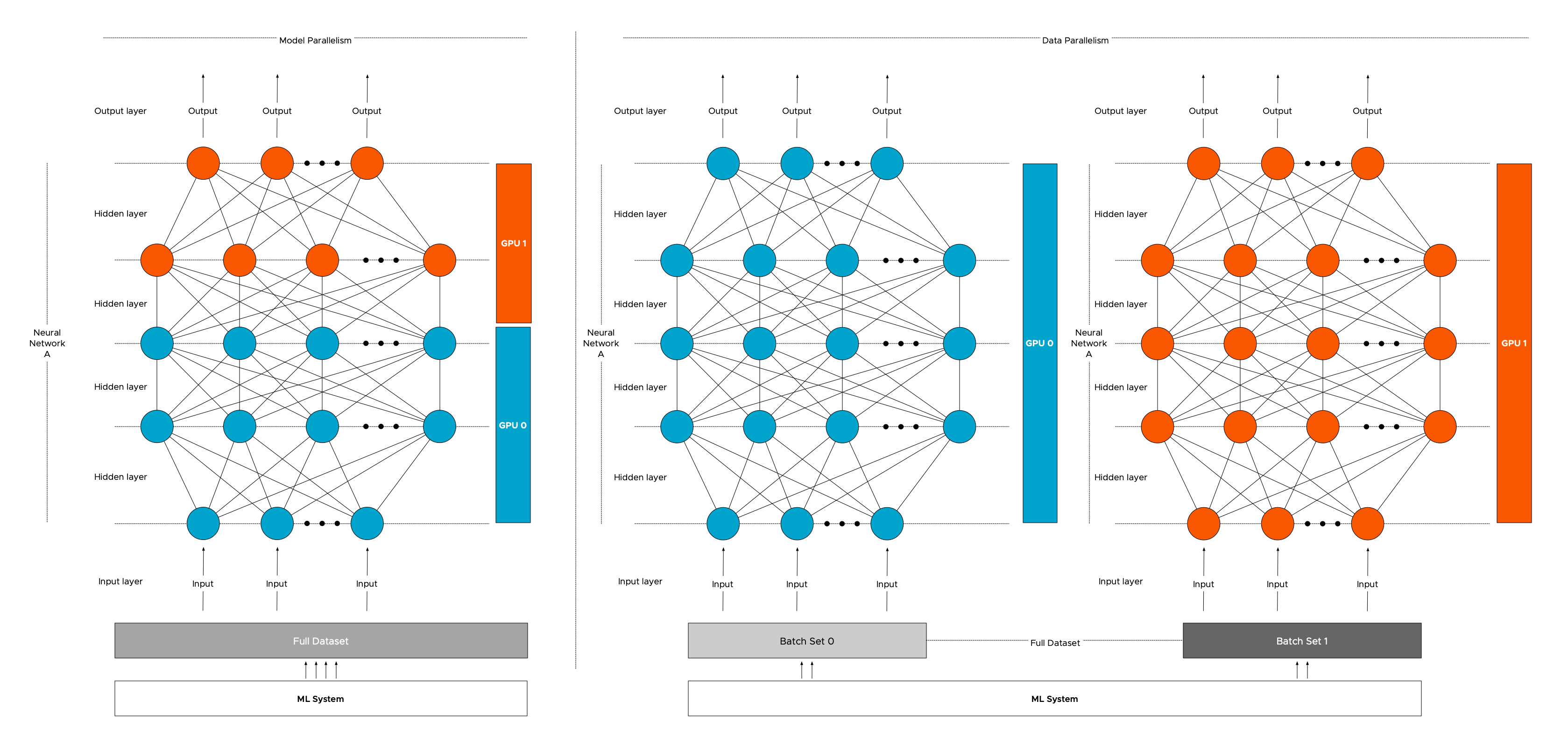

Multi-Layer Perceptron (MLP) is a fully connected hierarchical neural...