![Distributed Hierarchical GPU Parameter Server for Massive Scale Deep Learning Ads Systems | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/15b6fba2bfe6e9cb443d0b6177d6ec5501cff579/14-Figure7-1.png)

[PDF] Distributed Hierarchical GPU Parameter Server for Massive Scale Deep Learning Ads Systems | Semantic Scholar

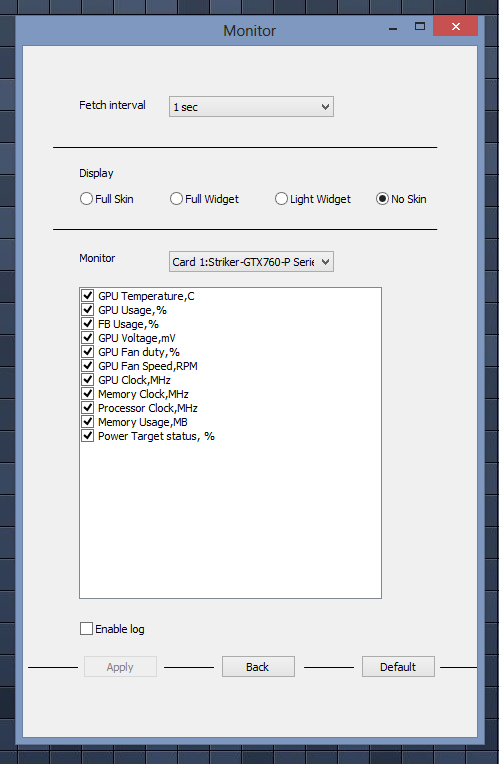

What kind of GPU is the key to speeding up Gigapixel AI? - Product Technical Support - Topaz Discussion Forum

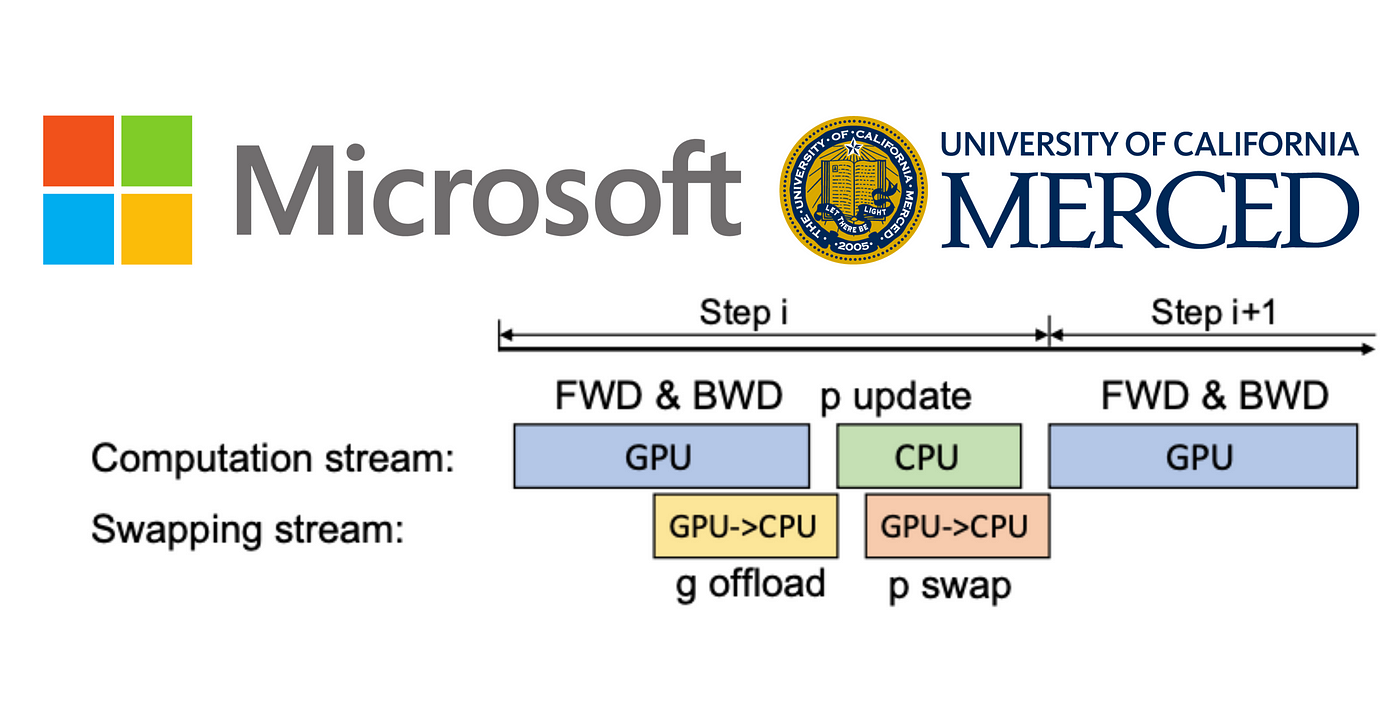

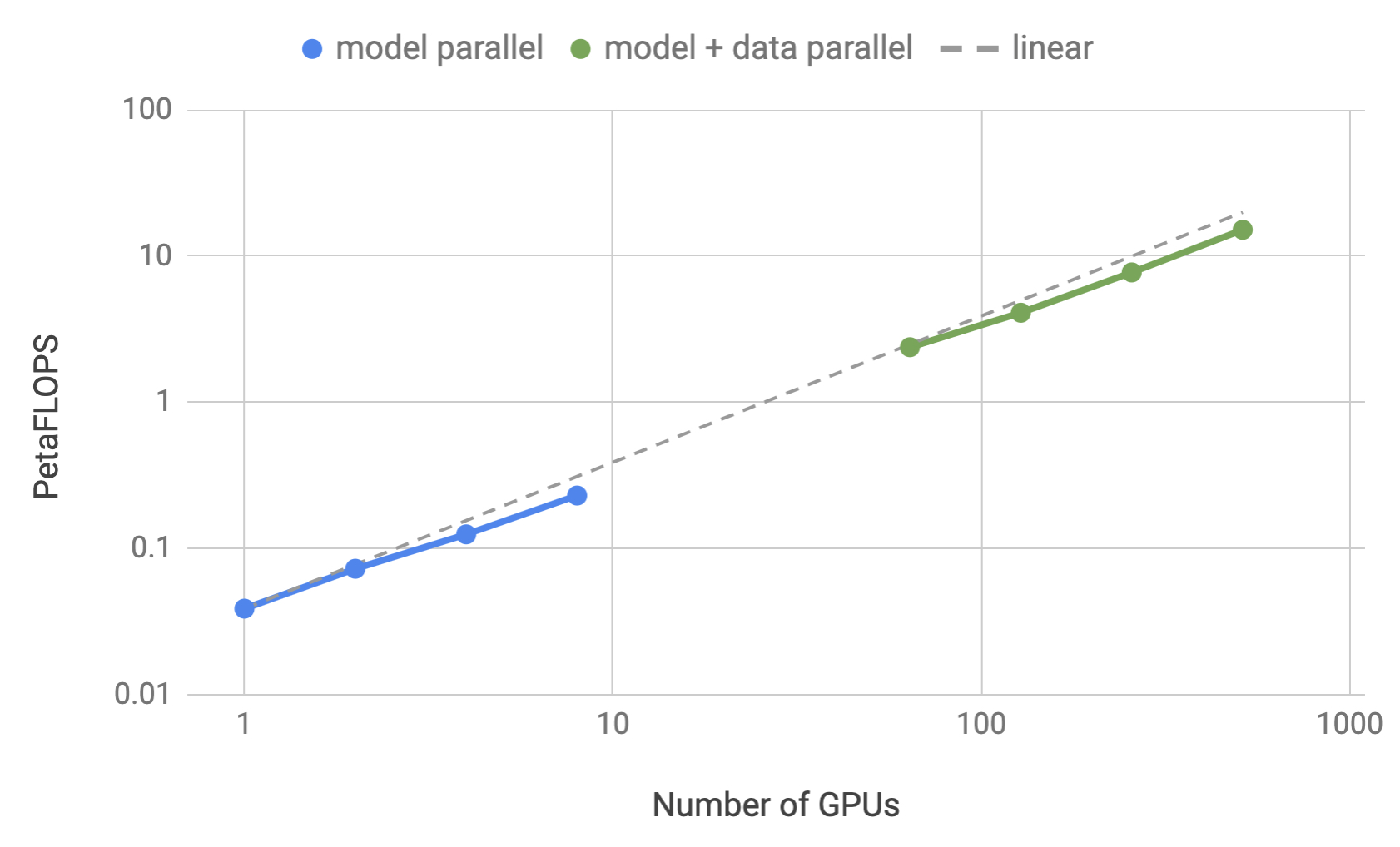

NVIDIA, Stanford & Microsoft Propose Efficient Trillion-Parameter Language Model Training on GPU Clusters | Synced

Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, the World's Largest and Most Powerful Generative Language Model | NVIDIA Technical Blog

Number of parameters and GPU memory usage of different networks. Memory... | Download Scientific Diagram

Parameters of graphic devices. CPU and GPU solution time (ms) vs. the... | Download Scientific Diagram