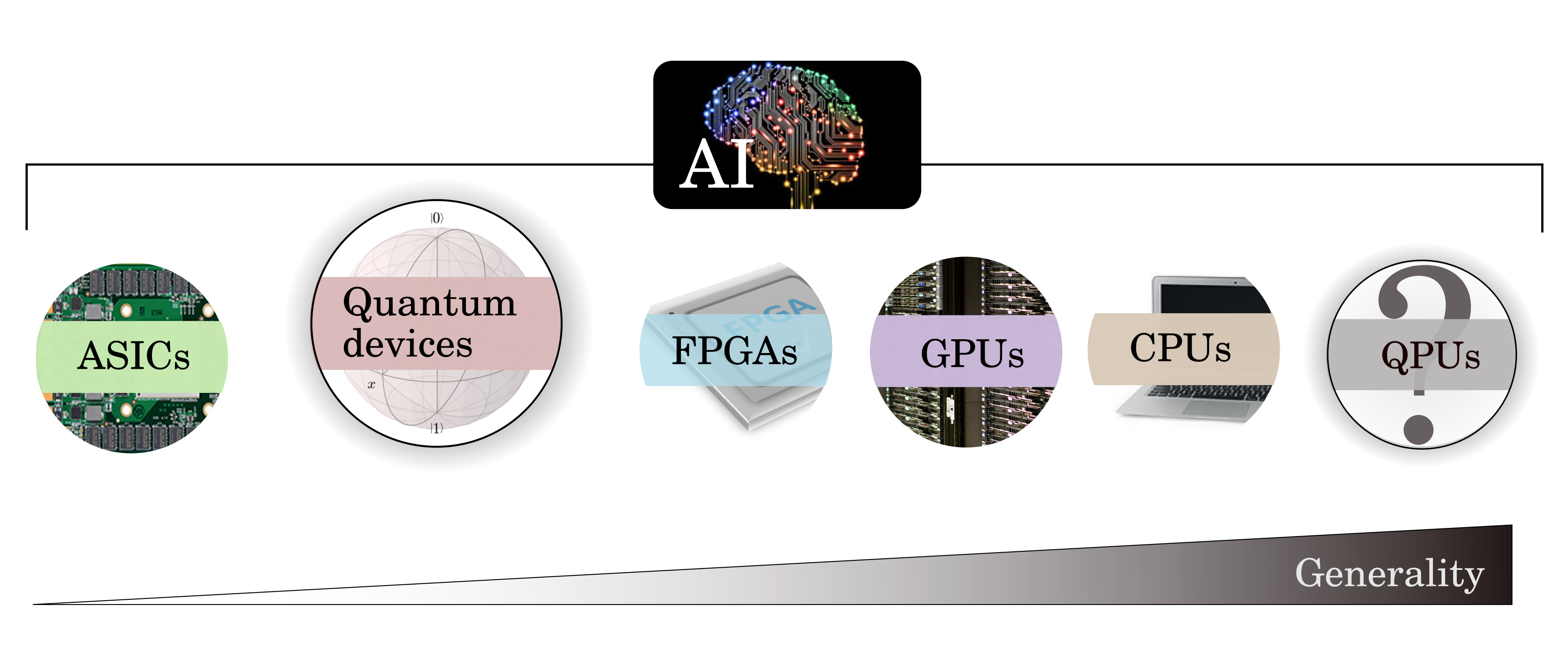

A complete guide to AI accelerators for deep learning inference: GPUs, AWS Inferentia and Amazon Elastic Inference, by Shashank Prasanna (Towards Data Science)

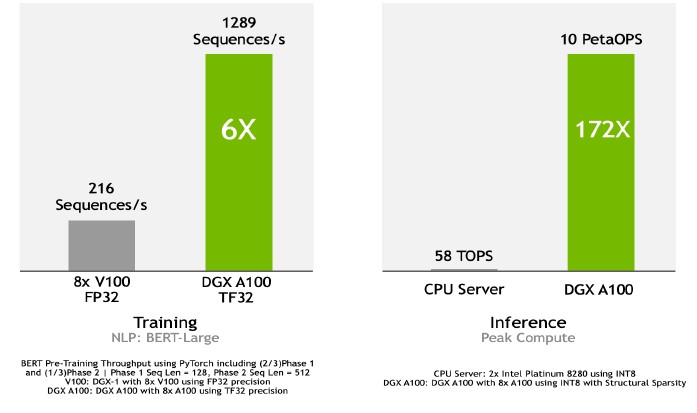

Bikal Tech on Twitter: "Performance #GPU vs #CPU for #AI optimisation #HPC #Inference and #DL #Training https://t.co/Aqf0UD5n7m"

How Good is RTX 3060 for ML AI Deep Learning Tasks and Comparison With GTX 1050 Ti and i7 10700F CPU (YouTube)